Why banning surveillance advertising is the regulatory wedge that could actually work

Picture Mark Zuckerberg sitting in front of the Senate Judiciary Committee, April 10th, 2018, looking like a confused android trying to process human emotion. For hours, senators lobbed questions at him. Some informed, most painfully not. Then Senator Orrin Hatch, 84 years old and clearly struggling with the concept of “the internet,” asked what might be the most accidentally perfect question of the hearing:

“If [a version of Facebook will always be free], how do you sustain a business model in which users don’t pay for your service?”

Zuckerberg’s response, delivered with all the charisma of a malfunctioning printer: “Senator, we run ads.”

Four words. That’s it. Four words that explain everything.

But here’s what nobody asked as a follow-up: What kind of ads?

Because that’s where the fascism lives.

Part 1: The Original Sin (1996-2010)

The 1996 Setup

In 1996, the internet was a baby. AOL was sending CDs in the mail like digital missionaries spreading the gospel of “You’ve Got Mail.” Netscape Navigator was cutting-edge technology. And Congress was in a full-blown moral panic because teenagers might see boobs online.

Parents across America were terrified. Their kids (innocent, pure children) could access pornography from the family computer. The horror. The scandal. Someone had to do something.

So Congress did what Congress does: they passed a law.

The Communications Decency Act of 1996, tucked into the larger Telecommunications Act, was designed to protect children from the corrupting influence of online smut. It criminalized transmitting “obscene or indecent” material to minors and threatened violators with fines and prison time. The intention was noble, if deeply paternalistic. The execution was a constitutional disaster.

But buried in this legislation, almost as an afterthought, was Section 230.

Here’s the actual text, all twenty-six words that would eventually break democracy:

“No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”

That’s it. That’s the whole thing.

The logic was sound for 1996. If you ran an online bulletin board or chat room, you couldn’t possibly review every single message users posted. The internet was growing fast. Too fast for human moderation at scale. If platforms were held legally liable for everything their users said, nobody would host user-generated content. The internet would remain a read-only medium, controlled by institutions, defeating the whole promise of democratized communication.

Section 230 said: Publishers are liable for content they create. Platforms are not liable for content their users create. It created a safe harbor. Room to experiment. Space to build without the paralyzing fear of lawsuits over every random asshole’s forum post.

It worked. Maybe too well.

The rest of the Communications Decency Act? The Supreme Court struck it down in 1997 as unconstitutional. A violation of the First Amendment. Turns out you can’t criminalize “indecent” speech online any more than you can criminalize it in books or newspapers.

But Section 230 survived. The ruling struck only the indecency provisions; Section 230 was never part of the challenge. So it stayed on the books, quietly governing the internet’s liability framework while everything around it evolved at light speed.

And for a while, for that brief, shining moment in the late ’90s and early 2000s, it seemed to be working exactly as intended.

The Utopian Dream vs. The Reality

Remember the optimism?

The internet was going to change everything. Democratize knowledge. Break down barriers. Connect humanity in ways previously unimaginable. We’d have access to all human wisdom at our fingertips. Information would flow freely across borders. Dictatorships would crumble under the weight of networked citizens. Democracy would flourish.

“The Net interprets censorship as damage and routes around it,” declared John Gilmore, one of the early internet pioneers. The architecture itself (decentralized, distributed, resistant to control) would guarantee freedom.

We were building a global village.

We were creating digital town squares where ideas could be debated openly.

We were ushering in an age of enlightenment.

Turns out we were building something else entirely.

Here’s what we actually got:

Echo chambers instead of dialogue. Algorithms learned that people prefer information confirming what they already believe, so they served it up endlessly. Why challenge your worldview when you can have it reinforced 10,000 times a day?

Radicalization pipelines instead of education. YouTube’s recommendation algorithm discovered that conspiracy theories drive engagement, so it funneled users from “moon landing documentary” to “moon landing hoax” to “the government is run by lizard people” in three clicks. Efficient!

Tribal warfare instead of a global village. Connecting everyone didn’t create understanding. It created infinite opportunities for people to find their tribe and declare war on everyone else’s tribe. Turns out proximity doesn’t equal empathy.

Misinformation and disinformation spreading faster than truth. Research shows false news spreads six times faster on Twitter than accurate news, not because of bots, but because we spread it. Lies are more emotionally engaging than truth, and engagement is all that matters.

Surveillance instead of freedom. Every click tracked. Every pause measured. Every scroll catalogued. The internet didn’t free us from observation; it made observation ubiquitous, corporate, and profitable.

The global village became a global dumpster fire.

And we’re all standing around it, watching our phones.

When Advertising Became Weaponized

Here’s where it goes from “disappointing” to “civilization-threatening.”

For most of internet history, advertising worked the same way it had worked for decades in print and broadcast media: contextually. You bought ad space based on the content surrounding it. Car ad in a car magazine. Beer ad during the Super Bowl. Luxury watch ad in The New Yorker. The advertiser paid for access to an audience likely to be interested in their product based on what that audience was reading or watching.

This model made sense. It was transparent. Everyone understood it. And critically, it didn’t require building detailed psychological profiles of individual people.

Then Google and Facebook changed everything.

Instead of “Here’s an ad for people reading articles about cars,” the pitch became: “Here’s an ad specifically for you, based on everything we know about you.”

Which sounds convenient… targeted ads mean fewer irrelevant ads, right? Who wants to see tampon ads if you don’t menstruate, or lawn care ads if you live in an apartment?

But here’s what actually happened: Google and Facebook didn’t just change how ads were served. They changed what was being sold.

The product isn’t ad space anymore.

The product is you.

Your attention. Your behavior. Your psychological vulnerabilities. Your predictability.

Advertisers don’t buy “ad impressions on Facebook.” They buy access to specific types of humans exhibiting specific types of behavior, identified through specific patterns of data.

“Show my ad to women aged 25-34 who recently got engaged, live in urban areas, have expressed interest in fitness, and exhibit purchasing behavior consistent with disposable income above $75k” isn’t a demographic guess. It’s a precision strike based on surveillance.
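To make the difference concrete, here is a minimal Python sketch of that request written as a filter over stored profiles. Everything in it is hypothetical: the `Profile` fields, the thresholds, and the sample users are invented for illustration and say nothing about any platform’s real schema or ad API.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Hypothetical per-person record; every field name is invented for illustration."""
    user_id: int
    gender: str
    age: int
    recently_engaged: bool
    urban: bool
    interests: set = field(default_factory=set)
    est_income: int = 0


def contextual_match(page_topic: str, ad_topic: str) -> bool:
    """Contextual targeting: the ad matches the page. Nothing about the reader is needed."""
    return page_topic == ad_topic


def behavioral_audience(profiles: list) -> list:
    """Behavioral targeting: select individual people by what has been learned about them."""
    return [
        p.user_id
        for p in profiles
        if p.gender == "F"
        and 25 <= p.age <= 34
        and p.recently_engaged
        and p.urban
        and "fitness" in p.interests
        and p.est_income > 75_000
    ]


# Four made-up users; only the first satisfies every condition in the request.
profiles = [
    Profile(1, "F", 29, True, True, {"fitness", "travel"}, 90_000),
    Profile(2, "F", 31, False, True, {"fitness"}, 120_000),
    Profile(3, "M", 27, True, True, {"fitness"}, 80_000),
    Profile(4, "F", 26, True, True, {"cooking"}, 95_000),
]

matched = behavioral_audience(profiles)
print(matched)
```

Notice what each function needs as input. The contextual check needs to know the page. The behavioral filter needs a row about you.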

And it gets worse.

Because once you’re in the business of predicting and influencing behavior, you’re incentivized to maximize the behavior you’re predicting. Keep people scrolling. Keep people clicking. Keep people engaged.

The algorithm doesn’t care if you’re engaged because you’re learning something valuable or because you’re hate-reading something that makes your blood boil. Engagement is engagement. And rage is the most reliable engagement driver that exists.

So the platforms optimized for rage.

This is when advertising stopped being advertising and became psychological operations as a service.

Shoshana Zuboff, author of The Age of Surveillance Capitalism, calls it “behavioral futures markets”: platforms aren’t just predicting what you’ll do, they’re shaping what you’ll do, and selling that influence to the highest bidder.

It’s not “Here’s a product you might like.”

It’s “Here’s a psychological intervention designed to change your behavior, delivered at the exact moment you’re most vulnerable to it, calibrated specifically to your individual weaknesses.”

And anyone with a credit card can buy it.

Foreign adversaries. Domestic extremists. Scam artists. Political campaigns. Corporations. Whoever.

The infrastructure for mass manipulation exists. It’s legal. And it’s for sale.

That’s what Zuckerberg meant when he said “Senator, we run ads.”

So how does this actually work? What does “surveillance advertising” mean in practice, beyond vague concerns about “data collection”?

Let me show you the machine. Let me show you exactly what’s being collected, how it’s being used, and why calling it “advertising” is like calling a predator drone a “delivery service.”

Because once you see it clearly, you can’t unsee it.

Part 2: Surveillance Advertising Explained

The Business Model in Plain English

If you’re not paying for the product, you are the product.

You’ve heard this before. It’s become a cliché, right up there with “read the terms and conditions” and “clear your browser history.” We nod along, vaguely uncomfortable, and then we keep scrolling because what else are we going to do?

But do you actually understand what it means?

Let me be specific.

You are not the customer. Advertisers are the customers. You are the inventory. Your attention is the product being sold. But it’s not just your attention in aggregate… it’s you, specifically. Your habits. Your fears. Your desires. Your weaknesses.

Here’s the actual transaction happening every time you open Facebook, Instagram, TikTok, or Google:

The platform collects data about you: what you click, what you pause on, what you scroll past, what you share, what you search, who you message, where you go, what you buy. All of it.

That data gets fed into machine learning models that build a psychological profile of you. Not just demographics (age, gender, location), but *psychographics…* your personality, your values, your vulnerabilities, your triggers.

Then the platform sells access to that profile. Not the data itself (usually), but the ability to reach you with messages calibrated to manipulate your specific psychology.

An advertiser doesn’t buy “1,000 impressions on Facebook.” They buy “access to 1,000 users who exhibit behavioral patterns consistent with high purchase intent for luxury goods, emotional vulnerability related to body image, and susceptibility to urgency-based messaging.”

That’s not advertising. That’s psychological targeting.

And the business model demands it get more precise, more invasive, more effective over time. Because shareholders demand growth. And growth means more data, more engagement, more manipulation, more profit.

It’s not sustainable. It’s extractive. And it’s everywhere.

The Psychological Exploitation

They’re not showing you products. They’re A/B testing what breaks your brain.

Here’s what Facebook, Google, TikTok, and every other attention merchant figured out: emotion drives engagement. Not curiosity. Not interest. Emotion. Specifically, the ugly ones.

Rage drives clicks. Fear drives clicks. Outrage drives clicks. Moral indignation drives clicks. Schadenfreude drives clicks.

You know what doesn’t drive clicks? Calm, nuanced discussion. Thoughtful analysis. Moderate positions. Boring facts.

So the algorithm learned to serve you the stuff that makes you feel something intense. Because intensity equals engagement, and engagement equals ad impressions, and ad impressions equal money.

The platforms will tell you the algorithm is neutral, that it’s just showing you “content you’re interested in” based on your behavior. But that’s a lie of omission. The algorithm doesn’t care if you’re interested in something because it’s valuable or because it makes you furious. It only cares that you clicked.

And here’s the sick genius of it: they’ve gamified your anger.

Every time you engage with something (like, comment, share, even hate-read), you’re training the algorithm. You’re teaching it what keeps you on the platform. And the algorithm learns fast.

Want to know why your uncle went from “moderate conservative” to “QAnon believer” in eighteen months? This is how. The algorithm discovered that conspiracy content kept him scrolling, so it served him more. Then more extreme versions. Then even more extreme. Each click teaching the machine: yes, more like this.

It’s called a recommendation algorithm. It should be called a radicalization pipeline.
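That loop can be sketched as a toy simulation. This is not how any real recommender is implemented. It assumes a made-up user who clicks more often the more intense the content is (`click_rate`), and a ranker that simply boosts whatever gets clicked. All numbers are invented.

```python
# Toy model: five content items, ordered from calm (index 0) to extreme (index 4).
# ASSUMPTION: the simulated user clicks more often on more intense content.
click_rate = [0.05, 0.10, 0.20, 0.35, 0.50]

# The ranker starts with no opinion: every item has the same score.
initial_scores = [1.0] * 5


def feed_share(scores):
    """Fraction of the feed given to each item, proportional to its score."""
    total = sum(scores)
    return [s / total for s in scores]


def run(rounds, scores):
    """Repeat the show -> click -> boost loop and return the final feed shares."""
    scores = list(scores)  # work on a copy so the caller's list is untouched
    for _ in range(rounds):
        share = feed_share(scores)
        for i in range(len(scores)):
            # Expected clicks = how often the item is shown * how often it is clicked.
            expected_clicks = share[i] * click_rate[i]
            # Each click teaches the machine: yes, more like this.
            scores[i] += 10 * expected_clicks
    return feed_share(scores)


before = feed_share(initial_scores)
after = run(50, initial_scores)

print("feed share before:", [round(x, 2) for x in before])
print("feed share after: ", [round(x, 2) for x in after])
```

Nothing in the loop mentions anger or extremism. The ranker only ever sees clicks, and the feed still drifts toward the most intense item, because that is where the clicks are.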

The platforms hire PhDs in behavioral psychology and neuroscience to design these systems. They use the same techniques that make slot machines addictive: variable reward schedules, intermittent reinforcement, dopamine exploitation.

You pull the lever (scroll). Sometimes you get a reward (funny meme, infuriating post, validation from a “like”). Sometimes you don’t. But you keep pulling, because your brain is wired to respond to that pattern.

It’s not an accident. It’s not a side effect. It’s the design.

Tristan Harris, former Google design ethicist turned whistleblower, calls it “human downgrading.” We’re not users of these platforms. We’re lab rats in Skinner boxes, and the boxes are getting more sophisticated every day.

And it works. Bob help us, it works.

The Data Nightmare

Let’s talk about what they actually know about you.

Not what they might know. Not hypotheticals. What they actually collect and what they actually infer from that collection.

What They Collect

Location data. Everywhere you go, every day, all day. Your home address (where you are every night). Your work address (where you are every weekday). The gym you go to. The bars you frequent. The protests you attend. The clinics you visit. Google Maps tracks you even when you’re not actively using it. Facebook tracks you through other apps. Your phone is a tracking device that occasionally makes calls.

Purchase history. What you buy, where you buy it, how much you spend, how often you return things. Credit card companies sell this data to data brokers, who sell it to platforms. They know if you’re financially stable or desperate. They know if you have expensive taste or shop discount. They know if you’re buying baby supplies or alcohol or guns or sex toys.

Browsing history. Every website you visit. Every article you read. Every product you look at but don’t buy. Every video you watch. How long you stay on each page. What you scroll past. What you click. Facebook has tracking pixels on millions of websites. You don’t have to be on Facebook for Facebook to be watching you.

Messages. Yes, they scan your messages. Not necessarily humans reading them, but algorithms analyzing sentiment, content, relationships, patterns. WhatsApp claims end-to-end encryption, but metadata still leaks: who you talk to, when, how often, message length. Telegram, Discord, Slack: none of these encrypt ordinary messages end to end by default, so the platform itself can read and analyze what you write.

Photos. Facial recognition. Not just “who’s in this photo” but “where was this taken, what were they doing, who else was there.” Background analysis. Metadata from your camera. Instagram knows if you’re at the beach or a protest, at a wedding or a divorce lawyer’s office.

Relationships. Your social graph. Who you’re connected to, who you interact with, who you’ve cut off. They can infer familial relationships, romantic relationships, work relationships, political affiliations based on who you engage with. If your friends are all posting about pregnancy, the algorithm infers you might be trying to conceive. If your network suddenly shifts political, they notice.

Health data. Period tracking apps. Fitness trackers. Search queries about symptoms. Pharmacy purchases. Mental health app usage. They know if you’re depressed before your doctor does. They know if you’re pregnant before you tell your family. They know if you’re struggling with addiction.

Audio. Your phone listens. Not constantly (probably), but enough. Voice assistants like Alexa and Siri record audio and send it to servers for processing. Sometimes those recordings get reviewed by humans. Sometimes they get hacked. Always they get analyzed.

Everything.

What They Infer

The collected data is just raw material. The real horror is what they infer from it.

They don’t just know you searched for “depression symptoms.” They infer your mental health status, your likelihood of self-harm, your vulnerability to predatory ads.

They don’t just know you bought pregnancy tests. They infer your relationship status, your financial stress, your religious beliefs, whether you’re likely to carry to term or seek an abortion.

They don’t just know you attend church. They infer your political leanings, your stance on social issues, your susceptibility to moral appeals, your likelihood to donate to certain causes.

They build a model of you. Not the you that you present to the world… the real you, the one you hide from your spouse, your therapist, yourself. The you that clicks on things at 2 AM when your guard is down. The you that googles things you’d never say out loud. Basically, they know more about you than you do.

And then they sell access to that you.

What They Sell

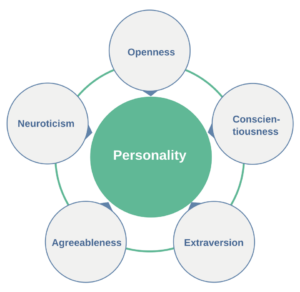

When Cambridge Analytica harvested 87 million Facebook profiles, they weren’t just grabbing names and emails. They were grabbing psychological profiles based on the “Big Five” personality traits: openness, conscientiousness, extraversion, agreeableness, neuroticism (OCEAN).

They used those profiles to determine which voters were persuadable and what messages would persuade them. Not generic political ads, individualized psychological interventions.

Neurotic? Here’s a fear-based message. High openness? Here’s an appeal to change. Low agreeableness? Here’s an enemy to hate.

It worked. Not perfectly, not in every case, but well enough. Well enough that Trump’s campaign paid them millions. Well enough that multiple countries hired them for election interference. Well enough that they became a verb, “to Cambridge Analytica someone.”

And here’s the thing: Cambridge Analytica was just using Facebook’s tools as designed. They didn’t hack anything. They didn’t break in. They used the platform’s API, paid for by Facebook’s ad system, built explicitly for this kind of targeting.

Facebook didn’t see a problem with it until journalists did.

The Shadow Economy

And Facebook isn’t alone in this. There’s an entire industry you’ve never heard of: data brokers.

Companies like Acxiom, Epsilon, Oracle Data Cloud, Experian (yes, the credit reporting company). They buy data from thousands of sources (retailers, websites, apps, public records, credit card companies) and compile it into profiles. Then they sell those profiles to anyone willing to pay.

Your insurance company can buy your fitness tracker data and use it to deny claims. Your employer can buy your social media sentiment analysis before deciding whether to hire you. Political campaigns can buy your anxiety triggers. Scammers can buy your financial desperation indicators.

It’s legal. It’s unregulated. And it’s a multi-billion-dollar industry operating in the shadows while we argue about whether Facebook is “biased” against conservatives.

Why This Isn’t “Just Advertising”

Let me be crystal clear about why this is fundamentally different from every form of advertising that came before it.

Scale. A TV commercial reaches millions of people with the same message. A Facebook ad reaches millions of people with millions of individualized messages, each one tailored to that specific person’s psychology.

Precision. A billboard hopes to catch the attention of its target demographic. Facebook knows your exact triggers and exploits them with surgical precision.

Opacity. A TV ad is public. Everyone sees the same thing, and it can be analyzed, critiqued, held accountable. A Facebook ad is invisible to everyone except you. No oversight. No accountability. You can’t even necessarily prove you saw what you saw, because it might not exist anymore.

Bidirectionality. A magazine ad talks at you. Facebook watches you react, learns from that reaction, and adjusts in real-time. It’s an iterative process of behavior modification.

No meaningful consent. You “agreed” to this by clicking “I Accept” on a terms-of-service agreement you never read, written by lawyers to be deliberately incomprehensible, governing practices that didn’t exist when you first signed up. That’s not consent. That’s a legal fiction.

This isn’t advertising. This is mass psychological manipulation as a business model.

Cambridge Analytica wasn’t a bug in the system. It was the system working exactly as designed.

They built the infrastructure for information warfare. Then they sold tickets to anyone with a credit card.

Foreign adversaries. Domestic extremists. Political campaigns. Corporations. Scammers. Whoever.

The weapon exists. It’s legal. And it’s for rent.

Still think I’m being hyperbolic? That this is just “ads, but on the internet”?

Let me show you the body count.

Because this isn’t theoretical. This isn’t a slippery slope argument. People are dead. Democracies are fracturing. Societies are collapsing.

And we have receipts.

Part 3: The Body Count

Let me show you what happens when you build infrastructure for psychological manipulation at scale, optimize it for engagement over truth, and sell access to the highest bidder.

Let me show you the cost.

Myanmar: Facebook Facilitated Genocide

In 2018, the United Nations published a report that used language international bodies almost never use. They said Facebook played a “determining role” in genocide.

Not “contributed to.” Not “was a factor in.” Determining.

That word choice was deliberate. That word choice was damning.

Here’s what happened.

The Setup

Myanmar (formerly Burma) spent decades under brutal military dictatorship. Then, starting in 2011, the country began a democratic transition. Political prisoners were released. Aung San Suu Kyi (Nobel Peace Prize laureate, icon of peaceful resistance) was freed from house arrest. Elections were held. The world was optimistic.

The internet arrived during this transition. And for most people in Myanmar, “the internet” meant one thing: Facebook.

The company had launched “Free Basics” in the country, a program that provided free access to Facebook and a handful of other services, even if you couldn’t afford a data plan. Sounds generous, right? Connecting the unconnected. Bringing people online.

What it actually did was make Facebook synonymous with the internet for millions of people. If you asked someone in Myanmar to “go online,” they opened Facebook. If you wanted to share news, you posted it on Facebook. If you wanted to organize politically, you did it on Facebook.

Facebook became the information infrastructure for an entire country emerging from isolation. The digital town square. The place where Myanmar’s future would be debated and decided.

And Facebook had almost no one monitoring it.

In 2015, at the height of rising tensions between the Buddhist majority and the Rohingya Muslim minority, Facebook had two Burmese-speaking content moderators. Two. For a country of 54 million people, where Facebook was the primary communication platform.

Two people to moderate the digital town square of an entire nation on the brink.

The Hate Campaign

Buddhist nationalist groups began using Facebook to spread anti-Rohingya propaganda. Dehumanizing posts. Conspiracy theories about Muslim plots to take over the country. Incitement to violence barely disguised as political speech.

And Facebook’s algorithm, optimized for engagement, promoted it.

[EDITOR’S NOTE: I initially planned to include actual screenshots of the anti-Rohingya propaganda that flooded Facebook during the genocide. After searching through archived materials and academic sources, I realized two things: First, most researchers have deliberately chosen not to republish this content… and for good reason. Second, I don’t want that garbage contaminating my blog, even as evidence. The documentation that it existed and what it said is sufficient. The text examples included here come from UN reports and journalistic investigations. If you need more convincing that this happened, the sources are linked throughout.]

Some examples of this hate speech include:

“We must fight them the way Hitler did the Jews, damn kalars!” (“Kalars” is a racial slur.)

Claims Rohingya aren’t citizens and warnings about “Muslim invasion”

Rohingya man framed as an illegal immigrant

Comparisons to “dogs and maggots”

Posts with 200+ comments calling for Rohingya to be “shot, killed, or permanently removed”

If you want to read Facebook’s bullshit excuse for their inactivity, you can read it here.

Because rage drives clicks. Fear drives shares. Hatred drives engagement. And the algorithm doesn’t care why you’re engaged, only that you are.

The hate speech wasn’t hidden in dark corners of the internet. It was in mainstream feeds, recommended by the algorithm, amplified to millions. Prominent military figures used Facebook to spread disinformation. Buddhist monks used it to call for ethnic cleansing. Fake news about Rohingya violence went viral while fact-checks languished.

One widely shared post claimed Rohingya Muslims were raping Buddhist women and plotting to impose Sharia law. Another showed graphic photos (later revealed to be from different conflicts entirely) and claimed they depicted Rohingya atrocities. These posts reached millions. They were shared hundreds of thousands of times.

Facebook’s recommendation algorithm discovered that anti-Rohingya content was excellent for engagement, so it served it to more people. The more people engaged, the more it spread. A feedback loop of hatred, algorithmically optimized.

Investigative journalists later found that searching for certain Rohingya-related terms on Facebook would trigger the algorithm to recommend increasingly extreme anti-Rohingya content. The platform was actively radicalizing users.

The Violence

In August 2017, the Myanmar military launched what they called “clearance operations” against Rohingya villages in Rakhine State.

Here’s what “clearance operations” actually means:

Mass killings. Gang rapes. Villages burned to the ground. Children thrown into fires. (I am not exaggerating.) Families executed. Systematic ethnic cleansing.

The United Nations documented atrocities that included: shooting civilians indiscriminately, burning people alive in their homes, throwing babies into fires, gang-raping women and girls before killing them, beheading men in front of their families.

At least 25,000 Rohingya were killed, and that is a conservative estimate; the real number is likely much higher. Over 700,000 fled to neighboring Bangladesh, where they still live in refugee camps. Entire villages were erased from the map.

The UN Fact-Finding Mission concluded that Myanmar military leaders should be prosecuted for genocide, crimes against humanity, and war crimes. The International Court of Justice agreed to hear the case. The evidence was overwhelming.

And Facebook? Facebook’s own investigation, the one they commissioned after the UN report shamed them, admitted that the platform had been used to “foment division and incite offline violence.”

The UN report was more direct. Here’s the actual quote:

“Facebook has been a useful instrument for those seeking to spread hate, in a context where, for most users, Facebook is the Internet.”

Let that sink in. A useful instrument. The United Nations, an organization that bends over backward to be diplomatic, essentially said Facebook was a weapon wielded by genocidaires.

Facebook’s Response

Facebook knew about the problem. They’d been warned. Multiple times.

In 2013, four years before the worst violence, researchers were already raising alarms about hate speech on the platform in Myanmar. In 2015, civil society groups explicitly told Facebook that anti-Rohingya rhetoric was escalating and posed a danger. In early 2017, human rights groups warned that violence was imminent and Facebook was making it worse.

Facebook’s response? Slow, inadequate, negligent.

They kept two Burmese-speaking moderators until 2015. By mid-2018, after the genocide had already happened and the UN report had been published, they had increased that number to 60. By the end of 2018, they claimed to have 99.

Ninety-nine moderators. For a country of 54 million people. After 25,000 people were dead.

They claimed they were investing in artificial intelligence to detect hate speech. They claimed they were improving. They claimed they took the problem seriously.

But the violence had already happened. The damage was done.

In August 2018, after the UN report, Facebook finally removed the accounts of Myanmar’s military leaders, including Senior General Min Aung Hlaing, the commander-in-chief who oversaw the genocide. They removed military-affiliated pages that had millions of followers.

A year too late.

And here’s the kicker: when human rights lawyers requested that Facebook preserve and share the deleted content as evidence for genocide prosecutions, Facebook refused. They cited user privacy concerns and the “extraordinary” breadth of the request. Read about that here.

They facilitated genocide, then refused to help prosecute it.

The Aftermath

Facebook didn’t cause the genocide. The Myanmar military did. The Buddhist nationalists did. Decades of persecution and dehumanization created the conditions.

But Facebook built the megaphone. Facebook optimized the megaphone for maximum emotional impact. And then Facebook rented that megaphone to anyone who wanted to use it, including people actively organizing mass murder.

The UN investigators were unambiguous about this. Facebook wasn’t just a passive platform where bad things happened to occur. It was an active accelerant. The algorithm amplified hatred. The recommendation system radicalized users. The infrastructure enabled coordination of violence.

In 2021, Rohingya refugees filed a class-action lawsuit against Facebook for $150 billion, arguing the company’s negligence facilitated ethnic cleansing. Facebook’s response? The lawsuit is without merit. Section 230 protects them.

Twenty-five thousand dead. Seven hundred thousand refugees. Entire villages erased.

And Facebook’s legal position is: Not our problem. We just host the content.

That’s the power of Section 230. That’s what happens when platforms are immune from consequences.

2016 Election: Democracy as Attack Surface

Let’s talk about Russia.

Not because Russia “stole the election”; that’s unprovable and beside the point. Let’s talk about Russia because they proved something more terrifying: democracies are vulnerable to information warfare, and social media is the perfect weapon.

The Scale

In October 2017, Facebook General Counsel Colin Stretch testified before the Senate Judiciary Committee. He brought numbers. Big numbers.

Approximately **126 million Americans** (nearly half the voting population) were potentially exposed to Russian-backed content on Facebook during the 2016 election cycle.

Let me break that down:

Russian operatives, working for an organization called the Internet Research Agency (IRA), created 120 fake Facebook pages. Those pages posted about 80,000 pieces of content between January 2015 and August 2017. That content was directly served to about 29 million Americans. But because of how Facebook works (sharing, liking, commenting), the reach more than quadrupled. 126 million.

And that’s just Facebook. Twitter found 36,746 automated accounts linked to Russia, which generated 1.4 million election-related tweets. Google found Russian-linked activity on YouTube and its ad platforms. The operation was multi-platform, coordinated, and sophisticated.

Russia spent approximately $100,000 on Facebook ads. A rounding error compared to the billions spent by actual campaigns. But the organic reach, the amplification provided by Facebook’s algorithm and users sharing the content, was worth millions, maybe hundreds of millions in advertising value.

They didn’t need to buy reach. Facebook gave it to them.

The Method

Here’s what the Russian operation looked like in practice:

They didn’t run “Vote for Trump” ads. That would be too obvious, too easy to trace. Instead, they focused on divisive social issues designed to inflame existing tensions.

They created fake Black activist pages like “Blacktivist” that posted about police brutality and racial justice: content designed to energize Black voters while simultaneously terrifying white conservatives.

They created fake conservative pages like “Being Patriotic” and “Heart of Texas” that posted about immigration, gun rights, and Christian values: content designed to radicalize the right.

They created fake Muslim pages. Fake LGBT pages. Fake veteran pages. They posed as both sides of every cultural flashpoint.

The goal wasn’t to elect Trump (though they clearly preferred him). The goal was chaos. Distrust. Division. Making Americans hate each other. Making democracy feel broken.

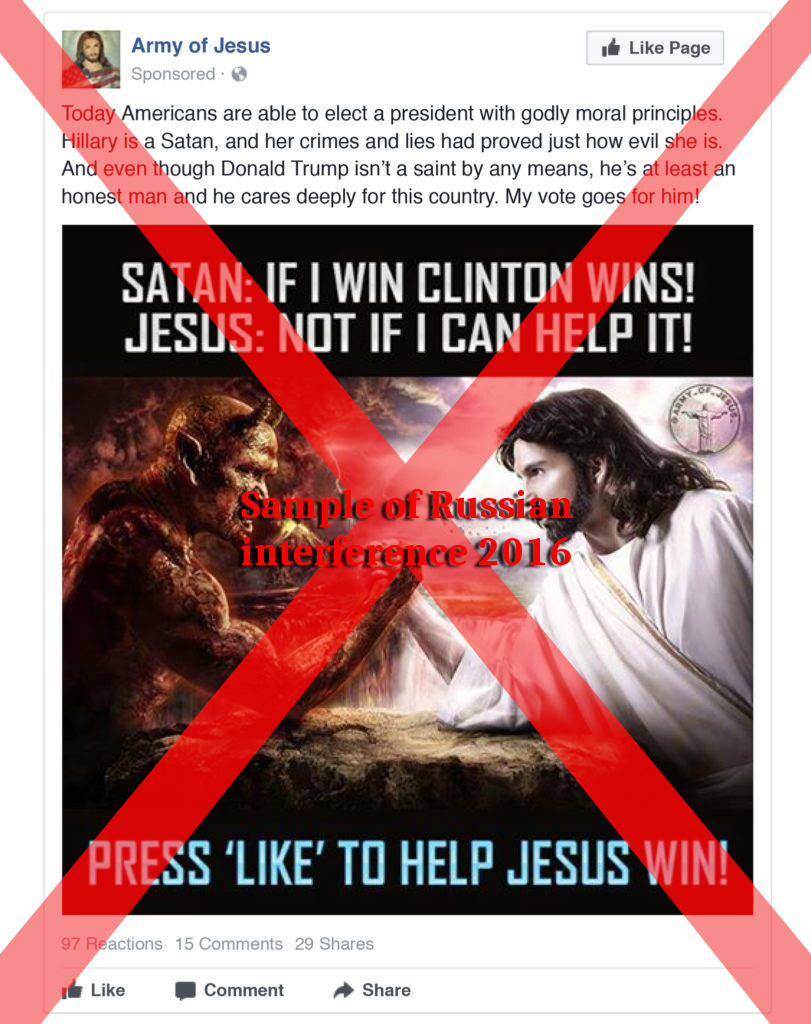

One of the most viral pre-election Facebook posts, shared over 340,000 times, was a cartoon of a gun-toting Yosemite Sam declaring he’d vote for Trump. It came from a Russian troll account.

On Instagram, the most successful Russian post was an image of Jesus Christ with the text “Press ‘Like’ to help Jesus win!” Over 300,000 people liked it.

Absurd? Yes. Effective? Also yes.

The Russians organized actual events through Facebook. They promoted opposing rallies at the same location, one pro-immigrant, one anti-immigrant, and watched Americans show up to yell at each other. They didn’t even have to be there. Facebook did the organizing for them.

After the election, the operation didn’t stop. Russian accounts promoted protests against Trump. Promoted pro-Trump rallies. Promoted conspiracy theories on both sides. The goal wasn’t picking a winner, it was making sure nobody trusted the system.

Cambridge Analytica: The Business Model Working as Designed

While Russia was running a psyops campaign, a British political consulting firm was running essentially the same operation, but legally and for profit.

Cambridge Analytica harvested data from 87 million Facebook users through a personality quiz app called “This Is Your Digital Life,” created by a Cambridge University researcher named Aleksandr Kogan. About 270,000 people downloaded the app and took the quiz. But because of how Facebook’s API worked at the time, the app also collected data from all of those users’ Facebook friends, without their consent or knowledge.

For those so inclined, technical details here.

That’s how 270,000 downloads turned into 87 million profiles.
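To make the mechanics concrete, here is a toy sketch of friend-graph harvesting. Everything in it is hypothetical: the names, the tiny graph, and the `harvested_profiles` helper are illustrations of the idea, not Facebook’s actual API or Kogan’s actual code.

```python
# Toy friend graph: keys are users, values are their friends.
# All names are hypothetical; this is not Facebook's actual API.
friends = {
    "ana": {"ben", "cho", "dev"},
    "ben": {"ana", "eli"},
    "cho": {"ana", "fay"},
    "dev": {"ana"},
    "eli": {"ben"},
    "fay": {"cho"},
}

def harvested_profiles(installers, graph):
    """Return everyone whose data is exposed when `installers` grant an app
    access to their own profile and to their friends' profiles."""
    exposed = set(installers)
    for user in installers:
        exposed |= graph.get(user, set())
    return exposed

installers = {"ana"}  # the only person who actually consented
exposed = harvested_profiles(installers, friends)
print(sorted(exposed))  # ['ana', 'ben', 'cho', 'dev']

# Back-of-envelope check on the figures quoted above.
installs, profiles = 270_000, 87_000_000
profiles_per_install = profiles / installs
print(round(profiles_per_install))  # 322
```

One consenting user exposes three people who never saw the quiz. Scale that up and the quoted figures imply a little over 300 harvested profiles per download, a rough average that ignores overlapping friend lists.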

The data included:

- Profile information

- Page likes

- Birthday and location

- News feed content

- Private messages (in some cases)

- Photos and social connections

Cambridge Analytica used that data to build psychological profiles based on the OCEAN model (Openness, Conscientiousness, Extraversion, Agreeableness, Neuroticism). Then they sold those profiles, and the ability to target ads based on them, to political campaigns.

Ted Cruz’s presidential campaign hired them in 2015. When Trump secured the Republican nomination, his campaign started paying them in September 2016, right before the general election. They used the data to microtarget voters with psychologically tailored messages.

High in neuroticism? Here’s a fear-based ad about crime. Low in agreeableness? Here’s an attack ad demonizing Hillary Clinton. High in openness? Here’s a message about change.

Not generic political advertising. Individualized psychological interventions.

Cambridge Analytica whistleblower Christopher Wylie described it as “weapons-grade communications tactics.” (Read Christopher Wylie’s book on the scandal, “MindF*ck”; it is horrifying, and it was partially responsible for this very article.) Steve Bannon, who sat on Cambridge Analytica’s board and later ran Trump’s campaign, called it “psychological warfare.”

And here’s the crucial point: This wasn’t a data breach. Facebook didn’t get hacked. Cambridge Analytica didn’t break into anything. They used Facebook’s platform exactly as it was designed to be used, to collect user data for targeted advertising.

Facebook’s terms of service prohibited sharing data with third parties, but the platform had no meaningful enforcement mechanism. When Facebook found out about the data sharing in 2015, they asked Cambridge Analytica to delete it. Cambridge Analytica said “sure,” and Facebook… believed them. No verification. No follow-up. No consequences.

The data wasn’t deleted. It was used to help elect the president.

When the story finally broke in March 2018, three years after Facebook learned about it, the company’s defense was essentially: “Well, technically this wasn’t a breach because users consented to the app’s data collection.”

Which is true, in the most pedantic possible sense. The 270,000 people who downloaded the app did consent. The other 86.7 million people whose data was harvested? They didn’t consent to shit.

Data goblins. Gobblin’ up your data.

[EDITOR’S NOTE: I feel it is important to spell out just how shady the collection of the “other 86.7 million” users’ data was. The 270,000 people who actually downloaded the app also (unbeknownst to them) authorized it to collect ALL of the same data about ALL of the people in their friends lists. THIS is how such a seemingly small sample led to such a large trove of data.]

Facebook’s stock dropped 24% in the week following the revelation: $134 billion in market value, evaporated. The FTC eventually fined them $5 billion (a record at the time, though still just a few months of profit).

Mark Zuckerberg took out full-page ads in major newspapers: “I’m sorry we didn’t do more at the time. We’re now taking steps to ensure this doesn’t happen again.”

Narrator: It kept happening.

The Broader Point

Russia’s operation and Cambridge Analytica’s operation both proved the same thing: The infrastructure for mass psychological manipulation exists, it’s incredibly effective, and anyone can use it.

You don’t need to be a sophisticated intelligence agency. You don’t need to hack anything. You just need money and a willingness to exploit the tools that Facebook has built.

Super technical sophisticated intelligence agency

The platform is the weapon.

Foreign adversaries can use it. Domestic extremists can use it. Political campaigns can use it. Scammers can use it. Anyone with a credit card and no conscience.

And it’s legal.

Section 230 says platforms aren’t liable for user content. So Facebook can build infrastructure purpose-designed for behavior manipulation, sell access to that infrastructure, and face no consequences when it’s used to undermine democracy.

That’s not an aberration. That’s the business model.

Teen Mental Health: The Kids Are Not Alright

While we were arguing about election interference and Russian bots, Facebook was running experiments on children.

And they knew exactly what they were doing.

The Internal Research

In September 2021, the Wall Street Journal published a bombshell series called “The Facebook Files,” based on internal company documents leaked by whistleblower Frances Haugen. Among the revelations: Facebook had conducted extensive research into Instagram’s effects on teenage mental health.

The research was damning. Devastating. Unambiguous.

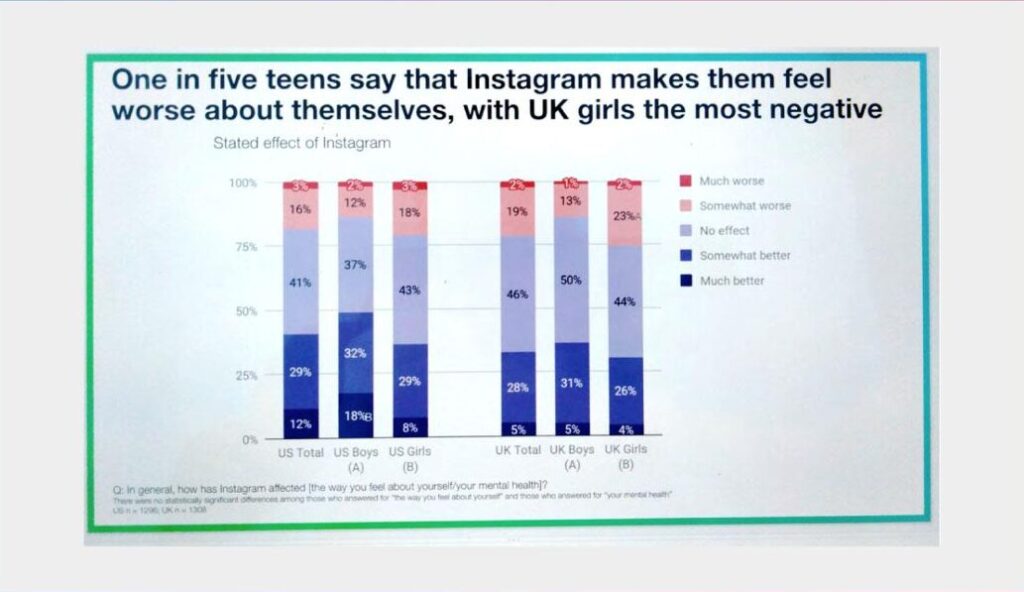

Here’s a slide from a 2019 internal presentation, in Facebook’s own words:

“We make body image issues worse for one in three teen girls.”

Not “might make worse.” Not “some evidence suggests.” We make body image issues worse.

They knew.

Here are more findings from Facebook’s internal research:

- 32% of teen girls said that when they felt bad about their bodies, Instagram made them feel worse.

- Among teens who reported having suicidal thoughts, 13% of British users and 6% of American users traced those thoughts directly to Instagram.

- “Teens blame Instagram for increases in the rate of anxiety and depression. This reaction was unprompted and consistent across all groups.”

- “Social comparison is worse on Instagram” than on other platforms like TikTok or Snapchat.

- More than 40% of Instagram users who reported feeling “unattractive” said the feeling began on the app.

Facebook researchers studied this for three years. Multiple presentations. Deep dives. Focus groups. Surveys of tens of thousands of teens.

They didn’t stumble upon this information. They researched it. Thoroughly. Deliberately. They wanted to understand how their platform affected young users.

And then they buried it.

What Makes Instagram Specifically Toxic

Instagram isn’t just “social media in general.” Facebook’s own research showed that Instagram’s specific design choices make it uniquely harmful to adolescent mental health, particularly for girls.

Here’s why:

TikTok is performance-based. It’s silly dances, comedy sketches, creative editing. There’s a performative artifice to it, everyone knows it’s a show.

Snapchat uses filters that are obviously fake, cartoonish, silly. The augmented reality is playful, not aspirational.

Instagram is a curated highlight reel presented as reality. It’s filtered, edited, perfectly composed photos of impossibly beautiful people living impossibly perfect lives. And it’s presented as real, as documentation of actual existence, not performance.

Teenage girls look at Instagram and see:

- Bodies they can’t have

- Lives they can’t live

- Friends having fun without them

- Perfection they can never achieve

The algorithm makes it worse. The “Explore” page, Instagram’s recommendation system, learns what keeps you engaged and serves you more of it. If you pause on fitness content, you get more fitness content. Then more extreme fitness content. Then pro-anorexia content.

If you look at one post about weight loss, Instagram will lead you to eating disorder communities in three clicks.

Facebook’s researchers documented this. They found that the platform created “rabbit holes” where teens interested in dieting would be recommended increasingly extreme content about restriction, purging, and self-harm.

And the more time teens spent on Instagram, the worse they felt. But they couldn’t stop. Because Instagram is designed to be addictive, using the same dopamine-exploitation techniques as slot machines.

You get a like → dopamine hit. You don’t get a like → anxiety. You check again. And again. And again.

Facebook’s own researchers called it a “perfect storm” of features that combine to harm teenage mental health.

The Cover-Up

Here’s what makes this unconscionable: Facebook knew all of this, and they lied about it.

In March 2021, Mark Zuckerberg testified before Congress. Lawmakers specifically asked about Instagram’s effects on children. His response:

“The research that we’ve seen is that using social apps to connect with other people can have positive mental health benefits.”

That’s not just spin. That’s not careful corporate language. That’s a lie.

Facebook had research. Their own research, conducted by their own employees, showing the exact opposite. And Zuckerberg stood before Congress and said the opposite.

When confronted specifically about research showing harm to teen girls, Zuckerberg claimed the evidence was “mixed” and “not clear.” Meanwhile, internal presentations literally had slides saying “WE MAKE BODY IMAGE ISSUES WORSE.”

Facebook refused to share their research with academics who requested it. They refused to share it with lawmakers who specifically asked for it. When senators wrote to Facebook asking for data on Instagram’s effects on youth mental health, Facebook sent them a six-page letter that carefully avoided mentioning any of their actual findings.

In May 2021, Instagram’s head Adam Mosseri told reporters that, based on the research he had seen, the app’s effects on teen well-being were likely “quite small.”

Four months later, the Wall Street Journal published Facebook’s internal research showing the problem was massive, well-documented, and undeniable.

When the Journal story broke, Facebook’s response was to accuse the journalists of cherry-picking data and taking findings out of context. They published their own blog post titled “What Our Research Really Says About Teen Well-Being and Instagram,” where they argued that actually Instagram helped most teens who were struggling.

The gaslighting was extraordinary. “Sure, we make body image issues worse for one in three teen girls, but two out of three are fine, so really we’re helping!”

The Business Decision

Why did Facebook hide this research? Why did they lie to Congress? Why did they keep optimizing Instagram for engagement even after their own researchers documented the harm?

Because Instagram is wildly profitable, particularly among young users. And addressing the mental health crisis would require changing the core design of the platform, which would reduce engagement, which would reduce ad revenue.

Facebook was literally choosing profits over children’s mental health. Explicitly. With full knowledge of the consequences.

They knew 13% of British teens with suicidal thoughts traced those thoughts to Instagram. And they decided that was an acceptable cost of doing business.

They knew they were making body image issues worse for millions of teenage girls. And they kept optimizing the algorithm for engagement.

They knew the “Explore” page was creating radicalization pipelines toward eating disorder content. And they kept recommending it.

Frances Haugen, the whistleblower who leaked the documents, put it bluntly: “Facebook has realized that if they change the algorithm to be safer, people will spend less time on the site, they’ll click on less ads, they’ll make less money.”

The safety of children or billions in ad revenue. Facebook chose the money.

Every. Single. Time.

The Consequences

Teenage suicide rates in the United States began rising sharply around 2010, right when smartphones and social media became ubiquitous. The increase has been particularly pronounced among teenage girls.

The CDC reports that between 2010 and 2019, suicide rates among girls aged 10-14 tripled. For girls aged 15-19, rates increased by 70%.

Jean Twenge, a psychologist at San Diego State University who studies generational differences, found that teens who spend five or more hours a day on electronic devices are 71% more likely to have at least one suicide risk factor than those who spend less than an hour.

Jonathan Haidt, social psychologist at NYU, has documented what he calls “The Great Rewiring of Childhood”: the shift from play-based to phone-based childhood that coincided with rising rates of anxiety, depression, self-harm, and suicide among teens, particularly girls.

Correlation isn’t causation, of course. But when you have:

- Dramatic increases in teen mental health crises starting around 2010

- The simultaneous rise of smartphones and social media

- Facebook’s own research showing Instagram makes these problems worse

- Studies showing screen time correlates with mental health issues

…at some point, you’re not being skeptical, you’re being willfully blind.

And here’s the thing: We’re still running the experiment. Instagram is still optimized the same way. The algorithm still promotes harmful content. Teenagers are still being fed images and messages that make them hate themselves.

Facebook made minor tweaks: some parental controls, some content warnings, some nudges to “take a break.” The equivalent of putting a band-aid on a severed artery.

The platform that makes body image issues worse for one in three teen girls is still running, still growing, still profitable.

And Facebook is still lying about it.

The Epistemological Collapse

But here’s the thing that should terrify you more than any of the above: We can’t agree on what’s real anymore.

This isn’t about partisan disagreement. Democracies can handle that. People have argued about policy since democracy was invented. Left versus right, liberty versus equality, individual rights versus collective good; these are legitimate debates with no clear answers.

This is different.

This is about the complete breakdown of shared epistemology. We can’t agree on basic, verifiable facts. Not opinions. Facts. The stuff that should be beyond debate.

Did the 2020 election have widespread fraud? No. Every audit, every recount, every court case confirmed it was secure. But millions of Americans believe it was stolen.

Are vaccines safe and effective? Yes. Decades of research by thousands of scientists across hundreds of institutions confirm this. But vaccine hesitancy is now a mainstream political position.

Is climate change real and caused by humans? Yes. 97% of climate scientists agree. But climate denial is policy in major political parties.

Is the Earth round? Yes, obviously, we’ve known this for 2,000 years. But Flat Earth has more adherents now than it did 20 years ago.

How did we get here?

**Algorithmic amplification of misinformation.**

The Mechanism

Here’s how it works:

Social media algorithms are optimized for engagement. Misinformation is more engaging than truth because it’s more emotionally provocative. Therefore, algorithms amplify misinformation.

Research by Sinan Aral at MIT studied the spread of news on Twitter. The findings: False news spreads six times faster than true news. Not because of bots, because of humans. We share emotionally engaging misinformation more readily than boring facts.

The algorithm learned this. So it serves us more misinformation.

YouTube’s recommendation algorithm has been particularly devastating. Researchers at Harvard’s Berkman Klein Center found that YouTube systematically recommends increasingly extreme content because extreme content drives watch time.

You start by watching a video about the moon landing. YouTube recommends a video questioning the moon landing. You watch it (because curiosity). YouTube recommends a video “proving” the moon landing was faked. You watch it. YouTube recommends a video about other government cover-ups. Soon you’re watching videos about chemtrails and lizard people.

This isn’t conspiracy theorizing about the algorithm. This is documented behavior. The recommendation system creates radicalization pipelines.

[EDITOR’S NOTE: Ever visit family and run into that one cursed uncle who refuses to believe the moon landing was real? Try Debunkbot! It gently lays out the facts and disputes the misinformation and disinformation. I don’t get paid for this, btw. It was made by MIT fellows.]

The Case Studies

**QAnon.** Started as a prank on 4chan. Became a mass movement because Facebook’s algorithm discovered that QAnon content drove massive engagement. So Facebook recommended it. QAnon groups grew to millions of members. Now we have sitting members of Congress who believe a cabal of Satanic pedophiles controls the government.

**Anti-Vax.** Used to be a fringe movement. Some hippies worried about “toxins,” some religious communities claiming exemptions. Then Facebook’s algorithm discovered that vaccine hesitancy content drove engagement. Anti-vax groups exploded. Now measles is back. Polio is threatening a comeback. We’re bringing back diseases we eradicated because Facebook made anti-science propaganda profitable.

**Flat Earth.** Was basically extinct as a belief system by the mid-20th century. Then YouTube’s recommendation algorithm started suggesting Flat Earth videos to people who watched space content (because “debunking” videos technically fall under similar categories). The movement grew exponentially. Not because people are dumber, because the algorithm is effective.

**Election Denial.** After the 2020 election, social media became a firehose of fraud allegations, conspiracy theories, and “stop the steal” content. Most of it was demonstrably false. Checked by dozens of fact-checkers, debunked in court, dismissed by Trump’s own appointees. Didn’t matter. The algorithm amplified it because it drove engagement. Result: millions of Americans believe a lie, culminating in January 6.

In each case, the pattern is the same:

- Fringe idea exists

- Algorithm discovers it drives engagement

- Algorithm recommends it to more people

- More people engage, algorithm recommends it more

- Feedback loop

- Fringe idea becomes mainstream
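The loop above can be sketched in a few lines. This is a toy model under loud assumptions: two hypothetical posts, made-up engagement rates, and a ranking rule that simply hands out impressions in proportion to past engagement. No real platform’s ranking system is this simple, and none of these numbers come from one.

```python
def simulate_feed(engage_rate, rounds=10, impressions_per_round=1000):
    """Each round, split impressions in proportion to the engagement every
    post has accumulated so far, then add the new engagement it earns."""
    # Start every post with one unit of engagement so none starts at zero.
    engagement = {post: 1.0 for post in engage_rate}
    history = []
    for _ in range(rounds):
        total = sum(engagement.values())
        shares = {post: engagement[post] / total for post in engagement}
        history.append(shares)  # share of the feed each post gets this round
        for post, share in shares.items():
            shown = impressions_per_round * share
            engagement[post] += shown * engage_rate[post]
    return history

# Hypothetical rates: the fringe post provokes a reaction 3x as often.
rates = {"measured_post": 0.02, "fringe_post": 0.06}
history = simulate_feed(rates)

print(f"Round 1 feed share, fringe post: {history[0]['fringe_post']:.0%}")
print(f"Round 10 feed share, fringe post: {history[-1]['fringe_post']:.0%}")
```

Both posts start with an equal share of the feed. Nobody in the model decides to promote the fringe post; the allocation rule does it on its own, because every round of extra engagement buys more impressions in the next round.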

Why This Breaks Democracy

You cannot have democracy without shared epistemology.

Democracy requires:

- Agreement on how to determine facts (scientific method, journalism, expertise)

- Trust in institutions that verify information (courts, universities, press)

- Ability to compromise based on shared understanding of reality

When everyone lives in algorithmically-curated reality tunnels, seeing completely different “facts,” those requirements collapse.

How do you compromise with someone who thinks vaccines are poison and you think they’re lifesaving medicine? How do you find middle ground with someone who thinks the election was stolen and you think it was secure? How do you debate policy with someone who thinks climate change is a hoax and you think it’s an existential threat?

You can’t.

So politics stops being about policy and becomes about which reality you inhabit.

And once that happens, democracy is dead. You’re just arguing about which tribe gets to impose their hallucination on everyone else.

The Spain Parallel

Everyone screams “Hitler!” when they talk about Trump or MAGA or authoritarianism. I find that a bit lazy. The better historical analogy is Spain, 1936.

The Spanish Civil War didn’t start with a dictator seizing power. It started with a society that couldn’t agree on basic facts. Republicans versus Nationalists. Church versus secular. Urban versus rural. Traditionalists versus modernists.

Everyone lived in their own reality. Everyone had their own “truth.” Institutions lost legitimacy. Violence became normalized. Political assassination. Street battles. Armed militias.

And then: war. Three years. 500,000 dead. Nearly forty years of fascist dictatorship.

Spain didn’t have Facebook. They fractured the old-fashioned way. Through newspapers, radio, propaganda, and in-person radicalization.

We’re speed-running the same pattern, but with algorithmic acceleration.

Red states and blue states might as well be different countries. They have different media ecosystems, different facts, different realities. Jan 6 was either an attempted coup or “legitimate political discourse” depending on which algorithm you trust.

How does this end?

History suggests: badly.

Part 4: Why They Won’t Fix It

So why doesn’t Facebook just… stop?

Why not hire enough moderators? Why not tune down the engagement optimization? Why not stop amplifying hate speech and misinformation? Why not prioritize user wellbeing over ad revenue?

Simple answer: Because they can’t.

Not “won’t.” Not “don’t want to.” Can’t.

Let me show you why self-regulation is structurally impossible when surveillance advertising is your entire business model.

It’s The Profit Model, Stupid

Here’s the simple, brutal truth: Facebook cannot fix the problem without destroying the company.

This isn’t hyperbole. This is math.

In 2023, Meta (Facebook’s parent company) generated approximately $135 billion in revenue. Of that, roughly $132 billion came from advertising. That’s not a typo. Set aside money-losing ventures like Reality Labs (the metaverse division that lost about $16 billion that year), and advertising revenue is literally more than 97% of what keeps the company afloat.

Let’s be even more specific. In Q3 2024, Meta made $40.6 billion in total revenue. $39.9 billion of that, 98.3%, came from ads.

Not “a significant portion.” Not “most of their revenue.” Ninety-eight point three percent.
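If you want to verify that percentage yourself, here is a minimal sketch using only the Q3 2024 figures quoted above. The `ad_share` helper is just an illustrative name.

```python
def ad_share(ad_revenue, total_revenue):
    """Advertising revenue as a percentage of total revenue."""
    return 100 * ad_revenue / total_revenue

# Q3 2024 figures as quoted above, in billions of US dollars.
q3_total = 40.6
q3_ads = 39.9

share = ad_share(q3_ads, q3_total)
print(f"Ad share of revenue: {share:.1f}%")          # 98.3%
print(f"Everything else: {q3_total - q3_ads:.1f}B")  # 0.7B
```

Everything that isn’t an ad (hardware, subscriptions, all of it) fits inside that remaining $0.7 billion.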

Google? About 80% of revenue from ads. Still dependent, but at least they have other businesses (cloud services, hardware, enterprise software). Meta? It’s surveillance advertising or nothing.

So when people say “Facebook should stop doing surveillance advertising,” what they’re actually saying is: “Facebook should voluntarily destroy 98% of their revenue stream.”

Now imagine you’re Mark Zuckerberg. Imagine going to your shareholders, the people who own the company and demand returns on their investment, and saying:

“Hey everyone, I know our stock price is based on our advertising business, and I know that business made us $131 billion last year, but we’re going to stop doing it. Yeah, revenue will drop by 98%. Stock will crater. We’ll lay off most of our employees. But it’s the ethical thing to do.”

You’d be fired before you finished the sentence. The board would remove you. Shareholders would sue. Investors would revolt.

This isn’t about whether Mark Zuckerberg is a good person or a bad person (he’s probably neither. Just a person optimizing for the incentives he’s given. There’s no such thing as good and evil). This is about structural incentives.

Meta is a publicly traded company. By law, executives have a fiduciary duty to maximize shareholder value. Not “do good things.” Not “be ethical.” Maximize shareholder value. That’s the legal obligation.

And surveillance advertising maximizes shareholder value. Massively. Undeniably.

So they cannot stop. Not because they’re evil. Because the business model, the entire architecture of the company, depends on it.

The Diversification Mirage

“But wait,” you might say, “couldn’t they pivot? Diversify? Find other revenue streams?”

They’re trying. It’s not working.

**The Metaverse (Reality Labs):** Mark Zuckerberg is betting tens of billions on virtual reality and the “metaverse.” It’s losing $16 billion per year. Nobody wants it. Nobody asked for it. It’s a vanity project eating investor money while the core business (advertising) keeps the lights on.

**Subscriptions:** Meta tried launching “Meta Verified,” a paid subscription tier. It flopped. Turns out people are not willing to pay $15/month for what they’ve gotten free for years. Especially when “free” still exists.

**E-commerce:** Instagram and Facebook have tried building shopping features. Some traction, but nowhere near enough to replace advertising revenue. And guess what powers their shopping recommendations? Surveillance data.

**AI Tools:** Meta is investing heavily in AI (chatbots, image generation, etc.). Great. How will they monetize it? Probably… advertising.

Every attempted diversification either fails or ends up depending on the same surveillance infrastructure.

The business model is surveillance. The company is surveillance. Asking Meta to stop surveillance advertising is like asking ExxonMobil to stop selling oil.

Sure, they could. But then what’s left? What is the company at that point?

The Stock Price Trap

Here’s the other structural problem: Meta’s stock price (currently hovering around $600-700 per share, for a market cap over $1 trillion) is based on growth projections. I hate trying to understand and/or explain money, but stay with me.

Wall Street doesn’t value companies based on what they’re making now. They value them based on what they will make in the future. Meta’s valuation assumes continued ad revenue growth, continued user growth, continued engagement growth.

If Meta announced they were abandoning surveillance advertising, the stock would collapse. Not by 10%. Not by 20%. By 50-70%. Maybe more.

And that’s not just rich investors losing money. That’s:

- Employee stock compensation evaporating

- Pension funds taking massive losses

- 401(k)s cratering for millions of Americans

- Economic ripple effects across the tech sector

I’m not saying “feel bad for Meta’s shareholders.” I’m saying the incentive structure makes reform structurally impossible.

Every CEO of every publicly traded company faces the same trap: short-term stock price matters more than long-term societal good. Quarterly earnings calls matter more than existential risks. Shareholder lawsuits are more immediate than moral hazard.

The system is designed to prevent the very reforms we need.

Every “Apology Tour” is Theater

Okay, so Meta can’t structurally reform. But surely when they get caught doing something egregious (facilitating genocide, harming children, enabling election interference), surely then they face consequences and make changes, right?

Let’s catalog the pattern.

2016: Russian Election Interference

The Scandal: Russia used Facebook to reach 126 million Americans with divisive content designed to undermine democracy.

Zuckerberg’s Initial Response (November 2016): Called the idea that Facebook influenced the election “a pretty crazy idea.”

After Evidence Became Undeniable (September 2017): “Calling that crazy was dismissive and I regret it.” Promised to protect election integrity. Promised to do better.

What Actually Changed: Minor policy tweaks. Some Russian accounts removed. No fundamental change to the ad targeting system that enabled it. No change to the algorithm that amplified it.

The Outcome: Russia did it again in 2018. And 2020. And continues doing it now.

2018: Cambridge Analytica

The Scandal: 87 million Facebook users had their data harvested and used for political manipulation.

Zuckerberg’s Response: Took out full-page newspaper ads apologizing. “I’m sorry we didn’t do more at the time. We’re now taking steps to ensure this doesn’t happen again.”

Testified Before Congress: Looked apologetic. Promised reforms. Said all the right things.

What Actually Changed: Some API restrictions (that were easy to work around). Slightly better disclosure about data sharing. No fundamental change to the surveillance business model. No penalties for executives. No criminal charges.

The Outcome: Data brokers still operate freely. Microtargeting still happens. The infrastructure that enabled Cambridge Analytica still exists.

2017: Myanmar Genocide

The Scandal: Facebook’s platform facilitated ethnic cleansing that killed 25,000+ people and displaced 700,000.

Facebook’s Response: Commissioned an independent report that concluded they were “too slow” to respond. Admitted the platform was used to “foment division and incite offline violence.”

What Actually Changed: Added some Burmese-speaking moderators (still totally inadequate). Removed some military accounts (years too late). Made vague promises about AI content moderation.

The Outcome: Similar patterns emerged in Ethiopia, India, and elsewhere. Facebook still operates in countries where it lacks adequate moderation capacity. The fundamental problem, engagement optimization amplifies hate, remains unchanged.

2021: Facebook Files / Instagram Teen Mental Health

The Scandal: Internal research showed Instagram makes body image worse for 1 in 3 teen girls and contributes to suicidal ideation. Facebook hid the research and lied to Congress.

Facebook’s Response: Claimed the WSJ reporting was “misleading.” Said they’re committed to teen safety. Announced they were “pausing” Instagram for Kids (a product literally no one wanted).

What Actually Changed: Some parental controls. Some content warnings. Some “take a break” reminders. The algorithm that promotes harmful content to teens? Still running.

The Outcome: Instagram is still optimized the same way. Teen mental health crisis continues. Facebook is still lying about the research.

The Pattern

Every scandal follows the exact same script:

- Evidence of harm emerges

- Facebook denies or minimizes

- Evidence becomes undeniable

- Executive looks sad on TV/takes out newspaper ads

- “We take this seriously” / “We’ll do better”

- Announce minor policy changes

- Maybe hire a few more moderators (still inadequate)

- Wait for news cycle to move on

- Resume business as usual

- Repeat when next scandal breaks

It’s not reform. It’s public relations damage control.

And it works! Because there are no real consequences. Congress yells at them for a few hours. They apologize. Their stock goes up. They keep making billions.

Why would they actually change?

The Zuckerberg Testimony Industrial Complex

Mark Zuckerberg has testified before Congress multiple times. Each time, it’s the same performance:

- Wears a suit (instead of his usual hoodie) to look “respectful”

- Sits on a thick seat cushion (seriously; the internet dubbed it a “booster seat”) to appear taller/more commanding

- Gives carefully rehearsed non-answers

- Occasionally looks confused by basic questions from tech-illiterate senators

- Promises to “follow up” with specific answers (which arrive months later as dense PDFs no one reads)

- Walks away with zero consequences

Senators get to look tough for their constituents. Facebook gets to look contrite. Nothing changes.

It’s theater. It’s always been theater.

The Regulatory Capture

“But surely,” you might think, “the government will step in and regulate them?”

Oh, sweet baby Jesus.

The government is owned by the tech companies.

Not through cartoonish corruption. No briefcases of cash changing hands in dark alleys. Through the boring, legal kind of corruption: lobbying, campaign contributions, and the revolving door.

The Lobbying Numbers

The tech industry now spends more on lobbying than Big Oil. Let that sink in. More than the fossil fuel companies that have been perfecting regulatory capture for a century.

In 2023:

- Meta spent ~$20 million on lobbying

- Amazon spent ~$21 million

- Google spent ~$12 million

- Apple spent ~$9 million

That’s per company, per year. And that’s just the disclosed lobbying. It doesn’t count:

- “Educational” donations to think tanks

- Funding for academic researchers (who mysteriously always find tech-friendly results)

- Campaign contributions

- Industry associations and trade groups

- “Consulting fees” to former government officials

That money buys influence. It buys access. It buys friendly legislation (or more accurately, the absence of unfriendly legislation).

When Congress considers tech regulation, guess who’s in the room helping draft the bill? Tech industry lobbyists. Guess who’s providing “technical expertise” to Congressional staffers who don’t understand the technology? Tech companies.

It’s regulatory capture 101.

The Revolving Door

Here’s how it works:

Government → Tech:

- Government official regulates tech companies

- Leaves government position

- Immediately hired by tech company for 10x their government salary

- Or joins tech-funded think tank

- Or becomes “consultant” for multiple tech firms

Tech → Government:

- Tech executive wants political influence

- Takes government position (often at financial loss, but worth it for the access)

- Writes favorable policy

- Returns to tech sector with expanded network and influence

Examples:

- Sheryl Sandberg (Facebook COO): Former Chief of Staff to Treasury Secretary Larry Summers

- Jay Carney (Amazon VP): Former White House Press Secretary

- Megan Smith (Google VP): Became U.S. Chief Technology Officer

- Nicole Wong (Google, Twitter legal): Became Deputy U.S. Chief Technology Officer

The list goes on. And on. And on.

When the people regulating tech companies are the same people who used to work for (or will soon work for) those companies, is it any wonder the regulations are toothless?

The “Innovation” Shield

Every time regulation is proposed, the tech industry deploys the same talking points:

“Don’t stifle innovation!” As if allowing surveillance advertising is the only way to innovate.

“American competitiveness!” As if regulating Facebook would somehow let China “win” (whatever that means).

“Let the market decide!” As if markets work when consumers don’t understand what they’re buying (spoiler: you’re not buying anything, you’re being sold).

“Unintended consequences!” The ultimate thought-terminating cliché. Yes, regulation might have unintended consequences. You know what definitely has consequences? Unregulated surveillance capitalism destroying democracy.

And these talking points work. Because they sound reasonable. Because politicians are scared of being blamed if regulation somehow hurts the economy. Because “innovation” has become a sacred cow that can’t be questioned.

Meanwhile:

- Section 230 has been essentially unchanged since 1996 (the one notable amendment, FOSTA-SESTA, came in 2018)

- No comprehensive federal privacy law exists

- The FTC is underfunded and outgunned

- Antitrust enforcement is a joke

- Fines are a fraction of quarterly profits

The regulatory environment is a moat protecting tech companies from accountability.

The Bipartisan Failure

And the worst part? It’s bipartisan!

Republicans won’t regulate because: “Free market! Small government! Don’t pick winners and losers!” (Also, tech companies donate to their campaigns.)

Democrats won’t regulate because: Tech companies are major donors to Democratic candidates. Silicon Valley is a key part of the coalition. And many Democrats genuinely believe in the tech-utopian vision.

Sure, occasionally you get bipartisan anger. Both sides hate Facebook for different reasons (Republicans: “censorship of conservatives!”; Democrats: “misinformation and hate speech!”). But they can’t agree on what the problem is, let alone how to fix it.

So we get Congressional hearings where senators yell at Zuckerberg for a few hours, everyone feels satisfied that “something” happened, and then… nothing happens.

The occasional bills that do get proposed are either:

- So toothless they’re meaningless

- So poorly written they’d be counterproductive

- So partisan they have no chance of passing

Actual, meaningful tech regulation? Not happening. Not under this system.

Historical Precedent: Industries Never Self-Regulate

This has happened before. Repeatedly. The playbook is always the same.

Let’s speedrun through history.

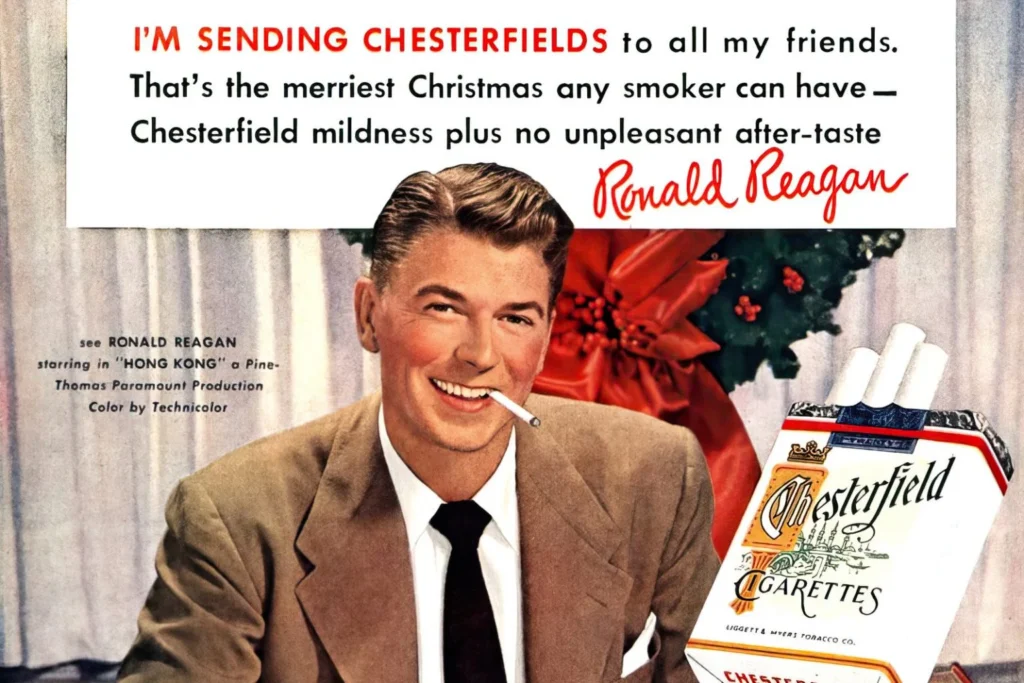

Big Tobacco

What They Knew: Cigarettes cause cancer. Smoking kills. They knew this in the 1950s. Their own research proved it.

What They Did:

- Buried the research

- Funded fake studies casting doubt on the cancer link

- Marketed to children (literally: candy cigarettes, cartoon mascots)

- Fought every regulation tooth and nail

- Lied under oath before Congress

- Only stopped when forced by massive lawsuits and government regulation

How Long It Took: From “we know it causes cancer” (1950s) to meaningful federal regulation (1990s-2000s): 40+ years

Did they self-regulate? No. Never. Not once. Not until legally forced.

Big Oil / Climate Change

What They Knew: Burning fossil fuels causes climate change. They knew this in the 1970s. Exxon’s own scientists predicted it with stunning accuracy.

What They Did:

- Buried the research

- Funded climate denial think tanks

- Seeded doubt in public discourse (“the science isn’t settled!”)

- Lobbied against environmental regulation

- Bought politicians

- Still doing all of this, today, right now

How Long It Took: From “we know climate change is real” (1970s) to… we’re still fighting about it. 50+ years and counting.

Did they self-regulate? Are you kidding? They’re literally still funding climate denial while their internal documents show they knew the truth for decades.

Lead Industry

What They Knew: Lead is a neurotoxin. It causes brain damage, especially in children. They knew this from the beginning. It was obvious.

What They Did:

- Put lead in gasoline anyway (more profit!)

- Put lead in paint anyway (more profit!)

- Funded research claiming lead was safe

- Fought regulation for decades

- Only stopped when forced by lawsuits and bans

How Long It Took: Lead was recognized as toxic in ancient Rome. We didn’t start phasing it out of gasoline until the 1970s (the full U.S. ban for on-road vehicles came in 1996), and didn’t ban it from residential paint until 1978. Literal millennia of knowing + decades of modern regulation to finally stop.

Did they self-regulate? What do you think?

The Pattern

Every industry follows the same playbook:

- Discover product causes harm

- Internal research confirms it

- Bury the research

- Publicly deny the harm

- Fund counter-research to sow doubt

- Fight any regulation

- Lobby politicians

- Wait for public to forget

- Repeat until forced to stop by law

Big Tech is following this playbook exactly.

Facebook’s internal research shows Instagram harms teens? Bury it. Deny it. Say “the research is mixed.” Fight regulation. Lobby Congress. Wait for the next scandal to distract everyone.

It’s not a conspiracy. It’s just capitalism. Industries do not self-regulate. Not out of malice (though that helps). Out of structural incentive.

Shareholder value demands profit maximization. Safety is a cost. Regulation is a cost. Therefore: minimize safety, fight regulation, maximize profit.

That’s not evil. It’s just math.

And that’s why regulation is the only thing that ever works.

The Fiduciary Duty Trap

Here’s the legal/structural issue that makes self-regulation impossible:

Publicly traded companies have a fiduciary duty to their shareholders. This is not optional. It’s not a guideline. It’s law.

What does “fiduciary duty” mean? In practice, it means: maximize shareholder value.

Not “be a good corporate citizen.” Not “balance profit and social good.” Maximize shareholder value.

If executives make decisions that prioritize ethics over profit, they can be sued by shareholders. And they will be. Activist investors will argue that management is breaching fiduciary duty by leaving money on the table. Read that again:

If executives make decisions that prioritize ethics over profit, they can be sued by shareholders.

So when Facebook’s leadership looks at the question “should we stop surveillance advertising even though it harms society?” the answer is predetermined by legal structure:

“Would stopping it maximize shareholder value?”

No? Then we can’t do it. Fiduciary duty says so.

This is why people who say “Facebook should just be more ethical” don’t understand the system. Facebook isn’t a person. It’s not an entity that can “choose” to be ethical. It’s a machine optimizing for shareholder returns.

Asking Facebook to voluntarily stop surveillance advertising is like asking a shark to become vegetarian. The shark isn’t evil. It’s just a shark. It does what sharks do.

Facebook does what publicly traded surveillance-advertising companies do: surveil and advertise.

The only way to change that is to change the rules that govern what companies are allowed to do.

Which brings us to…

If they can’t self-regulate, and government is captured, what the hell do we do?

Here’s where I’m going to tell you something that might actually work.

Not because it’s easy. Not because it’s likely. But because it’s possible, it has precedent, and most importantly: it attacks the profit model directly.

You can’t fix Facebook by asking nicely. You can’t fix it with Congressional hearings. You can’t fix it with minor policy tweaks.

You can only fix it by banning the thing that makes them money.

Let me show you how.

Part 5: The Solution (That Could Actually Work)

Okay. Deep breath.

We’ve established:

- Surveillance advertising is psychological warfare

- It’s causing measurable harm (genocide, election interference, teen suicide)

- Companies structurally cannot fix it themselves

- Government is captured by tech lobbying

So what the hell do we do?

Here’s the answer, and it’s simpler than you think: Ban surveillance advertising.

Not “fix the algorithm.” Not “moderate better.” Not vague calls for “accountability” or “transparency.”

Ban the collection and use of personal data for behavioral advertising. Full stop.

Let me show you why this is the right target, how it would work, and why it’s actually politically possible.

The Specific Ask

When I say “ban surveillance advertising,” here’s exactly what I mean:

What Would Be ALLOWED:

Contextual Advertising:

- Car ad on a car website

- Running shoes ad in a fitness magazine

- Recipe ingredients ad on a cooking blog

- Based on what you’re currently reading/watching, not who you are

First-Party Data:

- “You bought from us before, here’s a related product”

- “You’re signed into our service, here are relevant recommendations”